A gentle introduction to ensemble learning

This week’s article is a review paper about ensemble learning. The idea of review papers is that they tend to be fairly thorough and give you a good idea of what’s happening in a certain field or around a certain topic.

However, this is not a machine learning blog and I doubt that many of you really need a comprehensive overview of all the various ways to apply ensemble learning. Therefore I’ll focus on the main concepts. If you do want to know everything there is to know about ensemble learning, read the original article!

There are many different machine learning algorithms that can take a set of examples and produce a model that generalises those examples. Well-known algorithms include decision trees, neural networks, and linear regression.

Ensemble learning is not an algorithm per se, but refers to methods that combine multiple other machine learning algorithms to make decisions, typically in supervised machine learning tasks. The idea is that errors made by a single algorithm can be compensated for by other algorithms, much like how the wisdom of the crowd works in the following story:

While visiting a livestock fair, Galton conducted a simple weight guessing contest. The participants were asked to guess the weight of an ox. Hundreds of people participated in this contest, but no one succeeded in guessing the exact weight: 1,198 pounds. Much to his surprise, Galton found that the average of all guesses came quite close to the exact weight: 1,197 pounds. In this experiment, Galton revealed the power of combining many predictions in order to obtain an accurate prediction.

Anyone who’s familiar with cryptocurrencies or has been around long enough to have witnessed a stock market bubble knows that crowds are not necessarily wise. In order to achieve better results than a single decision maker, a crowd needs to meet the following criteria:

- Independence: Each individual has their own opinions;
- Decentralisation: Each individual is capable of specialisation and making conclusions based on local (i.e. only part of the) information;
- Diversity of opinions: Each individual has their own private information, even if it is just a certain interpretation of known facts;
- Aggregation: There is a way to combine all individual judgments into a collective decision.
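
To get a feel for why independence matters, here is a small simulation. It is only a sketch under made-up assumptions: the noise levels, the crowd size of 800 and the function names are all hypothetical and not taken from Galton's data. It compares a crowd whose errors are private and independent with a "herd" whose members share a common error.

```python
import random
import statistics

TRUE_WEIGHT = 1198  # pounds, the ox's weight from the story


def crowd_error(n_guessers, shared_bias_sd, private_noise_sd, seed):
    """Absolute error of the averaged guess for one simulated crowd."""
    rng = random.Random(seed)
    # One error term shared by everybody (herd behaviour) ...
    shared_bias = rng.gauss(0, shared_bias_sd)
    # ... plus an independent, private error per guesser.
    guesses = [
        TRUE_WEIGHT + shared_bias + rng.gauss(0, private_noise_sd)
        for _ in range(n_guessers)
    ]
    return abs(statistics.mean(guesses) - TRUE_WEIGHT)


def mean_crowd_error(shared_bias_sd, private_noise_sd, trials=200):
    """Average the crowd error over many simulated fairs."""
    return statistics.mean(
        crowd_error(800, shared_bias_sd, private_noise_sd, seed)
        for seed in range(trials)
    )


# Assumed noise levels, chosen purely for illustration.
independent_crowd = mean_crowd_error(shared_bias_sd=0.0, private_noise_sd=100.0)
herd_crowd = mean_crowd_error(shared_bias_sd=80.0, private_noise_sd=60.0)

print(f"independent crowd, error of the average: {independent_crowd:.1f} lb")
print(f"herd with shared bias, error of the average: {herd_crowd:.1f} lb")
```

Private errors largely cancel out when averaged, while a shared error does not, no matter how many people join the crowd.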

These concepts have been explored for supervised learning since the 1970s, and eventually led to the development of the well-known algorithm in the 1990s.

What makes ensemble methods so effective? There are several reasons for that:

-

Overfitting avoidance: When a model is trained with a small amount of data, it is prone to overfitting. This means that it makes that work very well for the training data, but absolutely suck for predicting anything else. “Averaging” assumptions from different models reduces the risk of working with incorrect assumptions.

-

Computational advantage: Single models that conduct local searches may get stuck in local optima, where they think they know all the answers, but in reality only know some of them.

-

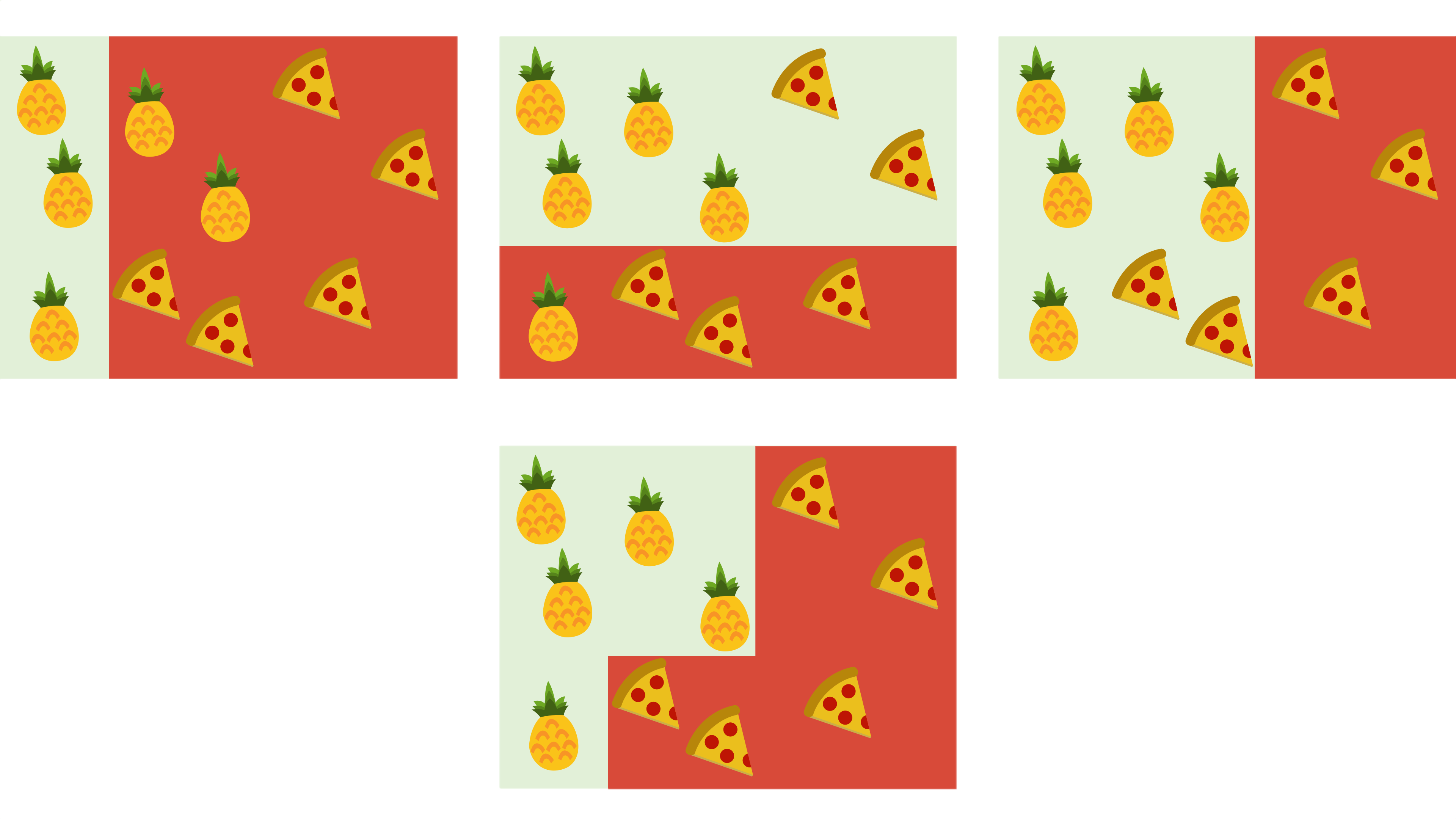

Representation: Different models may have assumptions that aren’t quite true. Combining them may lead to a more nuanced “worldview” that is more accurate. The figure below illustrates this idea. Each block in the top row represents the results of a simple binary model that’s merely okay-ish at classifying examples. The block on the bottom shows that we can obtain a perfect fit by combining the results of the three base models.
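
The representation argument can be reproduced with a toy example. The sketch below does not use the data behind the figure: the ten points, the labels and the three hand-written base models are invented for illustration. Each base model gets some points wrong, yet a simple majority vote over the three classifies everything correctly.

```python
# Toy 1-D dataset: points 3..6 are positive (+1), everything else negative (-1).
xs = list(range(10))
labels = [1 if 3 <= x < 7 else -1 for x in xs]


def right_of_three(x):
    """Base model 1: a single threshold, wrong for x >= 7."""
    return 1 if x >= 3 else -1


def left_of_seven(x):
    """Base model 2: a single threshold, wrong for x < 3."""
    return 1 if x < 7 else -1


def always_negative(x):
    """Base model 3: ignores x, wrong for every positive point."""
    return -1


base_models = [right_of_three, left_of_seven, always_negative]


def majority_vote(x):
    """Ensemble: predict the sign of the summed votes (three voters, so no ties)."""
    votes = sum(model(x) for model in base_models)
    return 1 if votes > 0 else -1


def accuracy(model):
    return sum(model(x) == y for x, y in zip(xs, labels)) / len(xs)


for model in base_models + [majority_vote]:
    print(f"{model.__name__}: {accuracy(model):.0%}")
```

No single threshold can carve out the interval between 3 and 7, but the combination of the three models can.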

Ensemble methods are especially useful for mitigating certain challenges:

- Class imbalance: In many machine learning problems some classes have more training examples than others. This may cause a bias towards over-represented classes. One way to solve this is by training multiple individual models using balanced subsamples of the training data.
- Concept drift: In many real-time machine learning applications the distribution of features and labels tends to change over time. By using multiple models these changes can be taken into account, e.g. by reweighting existing models and/or dynamically creating new ones when needed.
- Curse of dimensionality: Increasing the number of features increases the search space exponentially and makes it less likely that models generalise well. Methods like attribute bagging, where a model is trained using a randomly selected subset of features, make it possible to work around that issue.
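
As an illustration of the class imbalance remedy, the following sketch builds balanced subsamples by undersampling the larger classes. The helper name `balanced_subsamples` and the toy data are hypothetical; in practice each subsample would be handed to a separate base model.

```python
import random
from collections import Counter, defaultdict


def balanced_subsamples(examples, labels, n_models, seed=0):
    """Return one balanced (example, label) subsample per base model."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for example, label in zip(examples, labels):
        by_class[label].append(example)

    # Every class is cut down to the size of the smallest class.
    minority_size = min(len(items) for items in by_class.values())

    subsamples = []
    for _ in range(n_models):
        subset = []
        for label, items in by_class.items():
            # Sampling without replacement within one subsample; different
            # subsamples see different parts of the larger classes.
            subset.extend((example, label) for example in rng.sample(items, minority_size))
        rng.shuffle(subset)
        subsamples.append(subset)
    return subsamples


# Toy data: 10 "rare" examples versus 90 "common" ones.
examples = list(range(100))
labels = ["rare" if i < 10 else "common" for i in examples]

subsamples = balanced_subsamples(examples, labels, n_models=5)
for subset in subsamples:
    print(Counter(label for _, label in subset))
```

Each base model now sees both classes equally often, while the ensemble as a whole still gets to use most of the majority-class examples.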

When building an ensemble model two choices need to be made: a methodology for training the individual models and a process for combining the outputs of those models into a single output.

In order for an ensemble model to work well, each model’s individual performance should obviously be . Moreover, each individual model should be “diverse”. There are several ways to make sure that models are diverse:

- Input manipulation: Each base model is trained using a different training subset. This is especially effective for situations where small changes in the training set result in a completely different model.
- Manipulated learning algorithm: The use of each base model is altered in some way, e.g. by introducing randomness in its process or varying the learning rate or number of layers in a neural-network model.
- Partitioning: The original dataset can be divided into smaller subsets, which are then used to train different models. With horizontal partitioning each subset contains the full range of features, but only part of the examples. With vertical partitioning, each model is trained using the same examples, but with different features.
- Output manipulation: These are techniques that combine multiple binary classifiers into a single multi-class classifier. Error-correcting output codes (ECOC) are a good example: classes are encoded as an L-bit code word and each classifier tries to predict one of those L bits. The class whose code word is closest to the predicted value “wins”.
- Ensemble hybridisation: This approach combines at least two strategies when building the ensemble. The random forest algorithm is the best known example of this approach.
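
The decoding step of error-correcting output codes is easy to sketch. In the example below the three classes, their 5-bit code words and the use of Hamming distance as the notion of "closest" are assumptions made for illustration; the five binary classifiers themselves are left out and only their predicted bits are shown.

```python
# Hypothetical 5-bit code words (L = 5); any two differ in at least 3 bits.
CODE_WORDS = {
    "cat": (0, 0, 1, 1, 0),
    "dog": (0, 1, 0, 1, 1),
    "bird": (1, 0, 0, 0, 1),
}


def hamming_distance(a, b):
    """Number of positions in which two bit tuples differ."""
    return sum(x != y for x, y in zip(a, b))


def decode(predicted_bits):
    """Return the class whose code word is closest to the predicted bits."""
    return min(
        CODE_WORDS,
        key=lambda name: hamming_distance(CODE_WORDS[name], predicted_bits),
    )


# Suppose the five binary classifiers produced the code word for "dog",
# except that the fourth classifier got its bit wrong.
predicted = (0, 1, 0, 0, 1)
print(decode(predicted))
```

Because the code words are far apart, a single mistaken binary classifier does not change the final class.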

There are several main approaches for combining base model outputs into a single output:

- Weighting methods: Base model outputs can be combined by assigning weights to each model. For classification problems majority voting is the simplest method, while for regression problems this can be done by simply averaging all outputs. More sophisticated methods take probability or each individual model’s performance into account.
- Meta-learning methods: Meta-learning is a process of learning from learners. With this approach, the outputs of the base models serve as inputs for a meta-learner that generates the final output. This works especially well in cases where certain base models perform differently in different subspaces. Stacking is the most popular meta-learning technique, where the meta-learner tries to determine which base models are reliable and which are not.
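
The weighting methods boil down to a few lines of code. The following is a minimal sketch with hypothetical function names; the weights in the last example are made-up numbers standing in for, say, each model's validation accuracy.

```python
from collections import Counter, defaultdict


def majority_vote(predictions):
    """Classification: the label predicted by most base models.

    Ties are broken in favour of the label that appears first.
    """
    return Counter(predictions).most_common(1)[0][0]


def average(predictions):
    """Regression: the plain mean of all base model outputs."""
    return sum(predictions) / len(predictions)


def weighted_vote(predictions, weights):
    """Classification: each model's vote counts in proportion to its weight."""
    totals = defaultdict(float)
    for label, weight in zip(predictions, weights):
        totals[label] += weight
    return max(totals, key=totals.get)


print(majority_vote(["spam", "ham", "spam"]))
print(average([10.0, 12.0, 14.0]))
# The second model is trusted much more than the other two (assumed weights).
print(weighted_vote(["spam", "ham", "spam"], [0.2, 0.9, 0.3]))
```

Note how the weighted vote can overrule the majority when one model is considered far more reliable than the others.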

Ensemble methods can be divided into two categories: the dependent framework, in which individual models are daisy-chained, and the independent framework, which works more like the wisdom of the crowds example that we saw earlier.
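
The difference between the two frameworks shows up in the training loop. The sketch below is a simplified illustration, not any specific published algorithm: the dependent variant fits each new model on the errors left by the previous ones (in the spirit of boosting), while the independent variant trains every model on its own random resample (in the spirit of bagging). The regression stump, the toy data and the learning rate of 0.5 are all assumptions.

```python
import random
import statistics


def fit_stump(xs, ys):
    """Least-squares regression stump: one split, two constant outputs.

    Assumes xs contains at least two distinct values.
    """
    best = None
    for threshold in sorted(set(xs))[1:]:
        left = [y for x, y in zip(xs, ys) if x < threshold]
        right = [y for x, y in zip(xs, ys) if x >= threshold]
        left_mean, right_mean = statistics.mean(left), statistics.mean(right)
        error = sum((y - left_mean) ** 2 for y in left) + sum(
            (y - right_mean) ** 2 for y in right
        )
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x < threshold else right_mean


def train_dependent(xs, ys, n_models, learning_rate=0.5):
    """Daisy chain: every model is fitted on what its predecessors got wrong."""
    models, residuals = [], list(ys)
    for _ in range(n_models):
        model = fit_stump(xs, residuals)
        models.append(model)
        residuals = [r - learning_rate * model(x) for x, r in zip(xs, residuals)]
    return lambda x: sum(learning_rate * model(x) for model in models)


def train_independent(xs, ys, n_models, seed=0):
    """Crowd: every model is fitted separately on a bootstrap resample."""
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        picks = [rng.randrange(len(xs)) for _ in xs]
        models.append(fit_stump([xs[i] for i in picks], [ys[i] for i in picks]))
    return lambda x: statistics.mean(model(x) for model in models)


def mse(model, xs, ys):
    return statistics.mean((model(x) - y) ** 2 for x, y in zip(xs, ys))


xs = list(range(20))
ys = [x * x / 10 for x in xs]

dependent_one = train_dependent(xs, ys, n_models=1)
dependent_many = train_dependent(xs, ys, n_models=20)
independent_many = train_independent(xs, ys, n_models=20)

print(f"dependent, 1 model:    {mse(dependent_one, xs, ys):.2f}")
print(f"dependent, 20 models:  {mse(dependent_many, xs, ys):.2f}")
print(f"independent, 20 models: {mse(independent_many, xs, ys):.2f}")
```

In the dependent loop the order of the models matters and training cannot be parallelised; in the independent loop the models could be trained in any order or all at once.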

The table below provides an overview of some of the popular methods, which you can read more about in the original article:

| Method name | Fusion method | Dependency | Training approach |
|---|---|---|---|
| AdaBoost | weighting | dependent | input manipulation |
| Bagging | weighting | independent | input manipulation |
| Random forest | weighting | independent | ensemble hybridisation |
| Random subspace methods | weighting | independent | ensemble hybridisation |
| Gradient boosting machines | weighting | dependent | output manipulation |
| Error-correcting output codes | weighting | independent | output manipulation |
| Rotation forest | weighting | independent | manipulated learning |
| Extremely randomised trees | weighting | independent | partitioning |
| Stacking | meta-learning | independent | manipulated learning |

Each method has its own strengths and weaknesses. Which method is most suitable for you therefore depends on what you want to achieve, what your users want, and possibly what other models you have already used in existing applications.

Ensemble models offer better performance in exchange for a linear(-ish) increase in computational costs. This is usually a good trade-off, but not always.

For example, an ensemble model may be quite slow at making predictions, which is not what you’d want in a real-time predictive system. What’s more, because the output of an ensemble model is created from the output of multiple other models, it’s almost impossible for users to interpret the ensemble output, which may be important in domains that require clear and rational explanations for decisions, like medicine and insurance.

These issues can be mitigated to some extent, e.g. you can prune the number of base models by only including the best-performing ones in the final ensemble model or use methods that can derive a simpler model from an ensemble of different base models.
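
The simplest form of pruning can be sketched as follows. The helper name `prune_ensemble`, the model names and the validation scores are hypothetical, and ranking purely by individual score is an assumption: a more careful approach would also check that the remaining models are still diverse.

```python
def prune_ensemble(models, validation_scores, keep):
    """Keep only the `keep` base models with the highest validation score."""
    ranked = sorted(
        zip(models, validation_scores),
        key=lambda pair: pair[1],
        reverse=True,
    )
    return [model for model, _ in ranked[:keep]]


# Model names stand in for trained base models; scores are made up.
models = ["tree_a", "tree_b", "tree_c", "tree_d"]
validation_scores = [0.71, 0.88, 0.64, 0.80]

pruned = prune_ensemble(models, validation_scores, keep=2)
print(pruned)
```

Halving the number of base models roughly halves the prediction cost, which may be enough to make an ensemble usable in a real-time setting.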

- Ensemble learning is a way to make multiple machine learning algorithms “work together”
- Ensemble models offer better performance than single models, but are also more computationally expensive