COPTCHA: A Completely Original Public Turing test to tell bad Cops and Humans Apart

Systematic literature reviews can be extremely frustrating to conduct, but probably not for the reason you think. Yes, you’ll be reading tons of abstracts to weed out non-qualifying publications, but that’s still okay, and somewhat useful. Annotating and analysing publications is a lot more frustrating – but it’s also the most important part of a systematic literature review, so that makes it kind of okay I guess.

The most frustrating part happens before all these steps, when you use Google Scholar to find relevant articles or to look up .

It’s not necessarily Google Scholar itself that’s frustrating. I like Google Scholar a lot and I honestly wouldn’t know what I would do without it, because the alternatives are awful.

But if you use Google Scholar for an extended period of time – especially when you systematically click through result pages or “formulate” search queries by pasting titles from PDFs – it will very quickly start to show you a crapload of CAPTCHAs that ask you to select all images with traffic lights, storefronts, bicycles, fire hydrants, and other mundane objects.

Eventually it doesn’t even matter how well you complete the CAPTCHAs; Google will just keep showing you new ones, until you give up and leave.

This made me think. These CAPTCHAs are very useful for Google, which uses them to deter bots from scraping its search results and to collect data for training its machine learning models. But they’re not very useful for the user, at least not directly.

What if we could use CAPTCHAs to train users as well? For instance, when those users are frustratingly dense police officers, policymakers or “conservatives” who keep discriminating against people of colour.

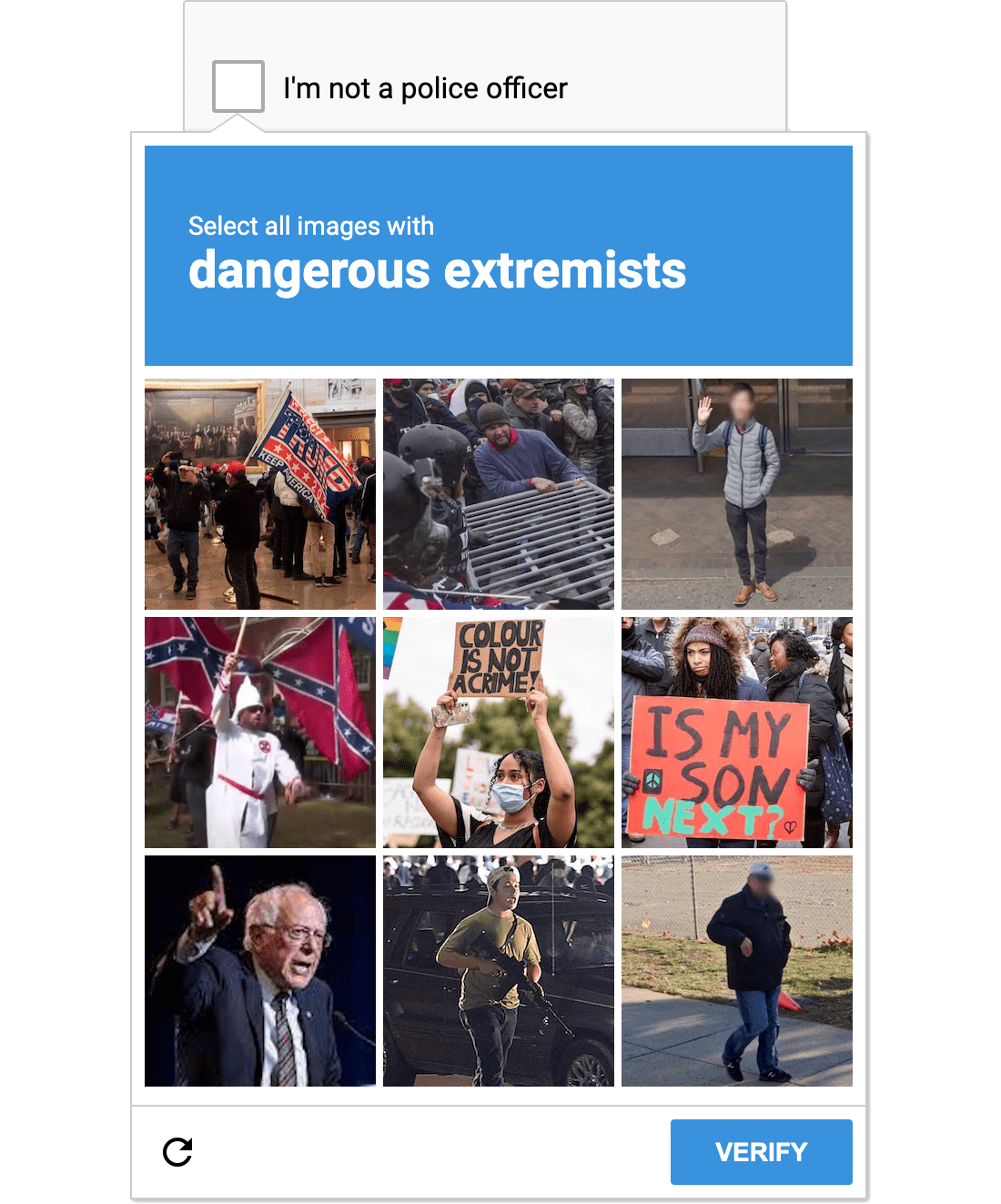

I created a proof of concept of such a CAPTCHA, called COPTCHA. COPTCHA looks a lot like Google’s reCAPTCHA, except that its challenges revolve around social issues rather than street furniture.

The screenshot below gives a rough idea of :

Casual reminder: this is just a screenshot of COPTCHA, so it doesn’t respond to clicks or taps.

Go check it out! The current version was hacked together in just a few hours and consequently doesn’t boast a very large selection of challenges yet, but more will be added over the next few months!