Migrating pods between Kubernetes nodes (without killing them)

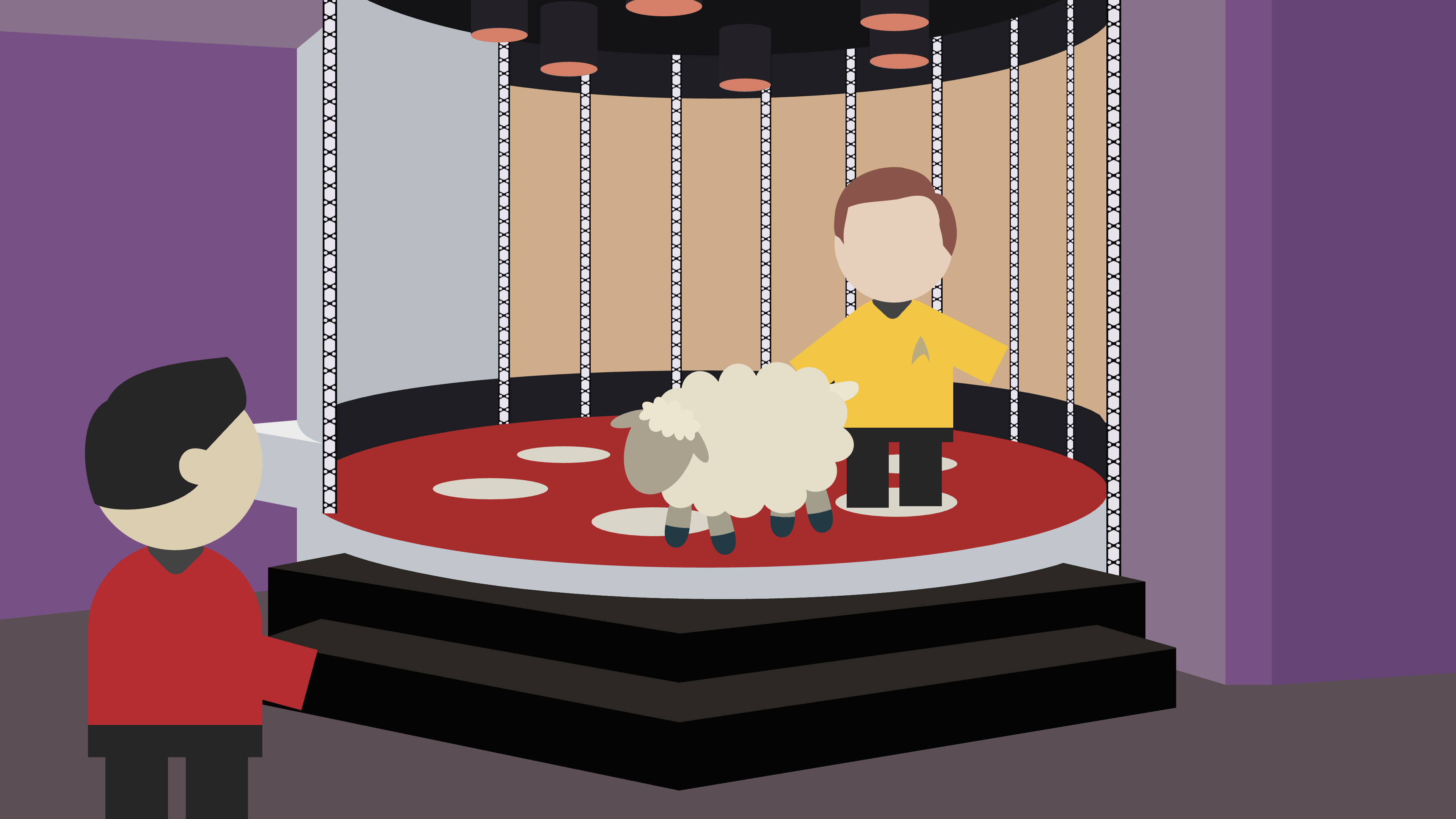

It’s often said that cloud resources should be treated as cattle rather than pets. Each resource, whether it be a machine or a container (the cattle), must be easily replaceable without affecting the overall system (the herd). For example, when Kubernetes moves pods between nodes, it does so by killing a healthy pod on the source node and creating an equivalent pod on the destination node. This design principle, when applied well, leads to improved robustness and stability.

Some engineers claim that containers must remain totally stateless or that any management action performed on a container turns it into a pet. But such claims are overzealous, because in practice we do try to care for our cattle and maximise their usefulness. In some cases, this means that we want the ability to move pods to different geographical locations, e.g. to reduce latency.

This paper presents a migration technique for stateful pods that actually transports a pod from one node to another without killing it, with minimal downtime.

The simplest way to migrate a running pod in Kubernetes is to stop it and deploy a new pod to a different server. This approach respects the “cattle” principle, but has two major drawbacks:

- Unless the application immediately dumps its runtime state to disk upon receiving the SIGTERM signal, any state is lost after the migration.
- Any open TCP connections will stop working, which may create inconsistencies between clients and the server.

Some container runtime systems, like LXD, provide support for container migration either by using built-in libraries or by relying on CRIU (Checkpoint/Restore In Userspace). CRIU is a Linux tool that, as its name suggests, runs in userspace (with support from kernel features) and makes it possible to snapshot and restore a container along with its state. This solves some problems, but not all, because:

- The snapshot doesn’t contain the entire container image, but only the changes that were made to the image, which must therefore already be available on the destination node.
- The container is not available while the system creates a snapshot and transfers the state of the container.
- CRIU only supports a small number of Linux kernel versions, which can make it harder to deploy.
- Network connections will still be dropped unless clients are modified to automatically reconnect to the new pod.

DMTCP (Distributed MultiThreaded CheckPointing) is a user-level package for distributed applications. It solves virtually all of the issues with CRIU, as it’s not tied to specific Linux kernel versions and is capable of recreating network states.

DMTCP uses a coordinator process that wraps system calls to keep track of threads, processes, and operating system resources. This coordinator can create snapshots by dumping the memory and resources of its inner process into a gzipped file that can be unpacked on another node.

DMTCP can be used to seamlessly migrate pods to other nodes.

In a nutshell, all we need to do is stop and checkpoint the state of the source pod, transfer the checkpoint data to the destination node, and recreate the pod from the checkpoint. For this to work we need two major components: execution agents and a migration controller.

Pods must include an execution agent in each container that can start and checkpoint processes by launching them using dmtcp_launch. Moreover, each pod needs an additional sidecar container that runs dmtcp_coordinator.

The PodSpec definition below shows what this might look like for a DMTCP-enabled nginx pod:

Each application container launches its start-up command (the value of START_UP) using dmtcp_launch, and has a shutdown script (./end_container) that creates a checkpoint before the container stops.

Since the DMTCP binaries require about 200MB of disk space, they’re not included in the image itself, but accessed via a volume mount (dmtcp-shared) that’s shared with all containers.
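
To make the execution agent's job more concrete, here is a minimal Python sketch of the two command lines it would need: one that wraps the start-up command in dmtcp_launch, and one that asks the coordinator for a checkpoint via dmtcp_command. The coordinator address, the checkpoint directory, and the function names are assumptions for illustration only; the flags are the ones listed in DMTCP's command-line help as far as I know, so check them against your installed version. The sketch only builds the argument lists and does not run anything.

```python
import shlex

# Assumed values: the source does not specify these.
COORD_HOST = "localhost"   # the sidecar shares the pod's network namespace
COORD_PORT = 7779          # DMTCP's default coordinator port
CKPT_DIR = "/checkpoints"  # hypothetical checkpoint directory


def launch_command(start_up, host=COORD_HOST, port=COORD_PORT, ckpt_dir=CKPT_DIR):
    """Wrap the application's start-up command (START_UP) in dmtcp_launch."""
    return [
        "dmtcp_launch",
        "--coord-host", host,
        "--coord-port", str(port),
        "--ckptdir", ckpt_dir,
    ] + shlex.split(start_up)


def checkpoint_command(host=COORD_HOST, port=COORD_PORT):
    """Ask the coordinator for a blocking checkpoint of all attached processes."""
    return [
        "dmtcp_command",
        "--coord-host", host,
        "--coord-port", str(port),
        "--bcheckpoint",
    ]
```

An agent could pass these lists to `subprocess.run` at container start and from its shutdown script respectively. Building them as lists rather than shell strings avoids quoting problems in the start-up command.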

The migration controller exists independently from deployed applications. It interacts with the Kubernetes API so that pods are stopped and started at the right time and coordinates migrations with execution agents by providing the following methods:

- migrate starts a pod migration from a source node to a destination node.
- register registers a new application container so that it can be distinguished from normal containers that are started within a pod.
- remove removes a container and returns whether the container is part of a migration, i.e. whether it should create a checkpoint or can stop immediately.
- copy notifies the migration controller that a checkpoint is ready to be moved to another node.
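
The four methods can be pictured as a small state machine. The sketch below is an in-memory illustration only: the class name, method signatures, and data structures are hypothetical, and the calls to the Kubernetes API that the real controller makes are left out entirely.

```python
from dataclasses import dataclass, field


@dataclass
class MigrationController:
    """Hypothetical in-memory model of the controller's four methods."""
    registered: set = field(default_factory=set)   # (pod, container) pairs
    migrating: dict = field(default_factory=dict)  # source pod -> destination node
    ready: list = field(default_factory=list)      # (checkpoint path, destination node)

    def migrate(self, pod, source_node, destination_node):
        # Mark the pod as being migrated; the real controller would also
        # create the target pod and request shutdown of the source pod.
        self.migrating[pod] = destination_node

    def register(self, pod, container):
        # Remember application containers so they can be told apart
        # from ordinary containers in the same pod.
        self.registered.add((pod, container))

    def remove(self, pod, container):
        # Returns True if the container must checkpoint before stopping.
        self.registered.discard((pod, container))
        return pod in self.migrating

    def copy(self, pod, checkpoint_path):
        # The checkpoint is ready: record where it has to be moved to.
        destination = self.migrating.pop(pod)
        self.ready.append((checkpoint_path, destination))
        return destination
```

In this model, a container that calls `remove` outside a migration gets `False` and can stop immediately, while one inside a migration gets `True` and is expected to call `copy` once its checkpoint has been written.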

The following sequence diagram shows how everything comes together when the migrate method is called:

First, the Kubernetes API is used to create the target pod on the destination node. The newly created pod registers itself with the migration controller and waits for its checkpoint.

Meanwhile, the migration controller notifies the service that new requests to the source pod (which is still running) must be routed to the target pod. This is done explicitly to reduce downtime, as automatic discovery may take up to 10–30 seconds.

The migration controller also requests a shutdown of the source pod, which responds by calling the remove method. Once the source pod receives a reply confirming that it is part of a migration, it starts to create a checkpoint of its state.

When the checkpoint is ready, the source pod notifies the migration controller, which then responds by copying the checkpoint to the target pod.

At this point, the source pod is no longer necessary and is authorised to terminate, while the target pod starts from the checkpoint and takes over.

This entire process comes with a little downtime, which starts with the creation of the checkpoint on the source pod and ends when the checkpoint has been copied to the target pod. The actual amount depends on the size of the compressed checkpoint snapshot and the bandwidth available for its transfer.
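
As a rough back-of-the-envelope model of that relationship, downtime can be approximated as the time needed to create the checkpoint plus the time needed to transfer it. The function below is a simplification of my own, not a formula from the paper, and the numbers in the usage example are purely illustrative rather than measured results.

```python
def estimated_downtime_s(checkpoint_s, size_mb, bandwidth_mbit_s):
    """Approximate downtime: checkpoint creation plus checkpoint transfer.

    checkpoint_s     -- seconds needed to write the compressed checkpoint
    size_mb          -- size of the compressed checkpoint in megabytes
    bandwidth_mbit_s -- available bandwidth in megabits per second
    """
    if bandwidth_mbit_s <= 0:
        raise ValueError("bandwidth must be positive")
    transfer_s = size_mb * 8 / bandwidth_mbit_s  # megabytes -> megabits
    return checkpoint_s + transfer_s


# Illustrative values only: a 50 MB checkpoint that takes 2 s to create
# and is sent over a 100 Mbit/s link.
print(estimated_downtime_s(2.0, 50, 100))  # 6.0
```

The model ignores latency, protocol overhead, and the time the target pod needs to restart from the checkpoint, so it is best read as a lower bound.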

Generally speaking, downtime is only slightly worse than and considerably better than CRIU-based methods. This solution also comes with relatively little overhead, especially within the pods – although the node that runs the migration controller will require more resources for its coordination work.

- Geo-distributed Kubernetes pod migration is feasible in a way that is fully transparent to applications and their clients.
- The method presented in this paper causes considerably less downtime than current state-of-the-art solutions.