Mapping the use of AI in counter-disinformation

Information spreads quickly on the internet, and consequently, so does disinformation. This has become a serious problem for modern societies, as traditional approaches to curtail disinformation don’t scale very well. Policymakers and media organisations increasingly see artificial intelligence (AI) as a tool that can be used to automate the detection of false or misleading information to prevent it from spreading further.

In this week’s post, we take a look at a brief research report that analyses the hyperlink citation structure of websites belonging to initiatives that use AI to fight disinformation. This is based on the idea that hyperlinks serve as proxies for social connections, which may include anything from similar viewpoints to actual collaborations.

The main goal of this study is to create a map of websites. The authors started by searching for pre-existing lists compiled by research or public institutions, filtered the listed websites for relevance and activity, and then crawled all pages that were at most two clicks away from each starting page.
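To make the "at most two clicks away" rule concrete, here is a minimal sketch of a depth-limited breadth-first crawl. The report does not say which crawler was used, so the function name `crawl_within_depth` and the toy `toy_links` dictionary are purely illustrative assumptions; a real crawl would fetch and parse pages instead of reading links from a dictionary.

```python
from collections import deque


def crawl_within_depth(start, links, max_depth=2):
    """Return {page: depth} for every page at most max_depth clicks from start.

    `links` maps a page to the pages it links to (a stand-in for real fetching).
    """
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        if depth[page] == max_depth:
            continue  # do not follow links beyond the depth limit
        for target in links.get(page, []):
            if target not in depth:  # first visit gives the shortest click distance
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


# Hypothetical toy link structure, not data from the report.
toy_links = {
    "seed": ["a", "b"],
    "a": ["c"],
    "c": ["d"],  # "d" is three clicks from "seed", so it is never reached
}
reached = crawl_within_depth("seed", toy_links)
```

Breadth-first order matters here: it guarantees that each page is recorded with its shortest click distance, so the two-click cut-off is applied consistently.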

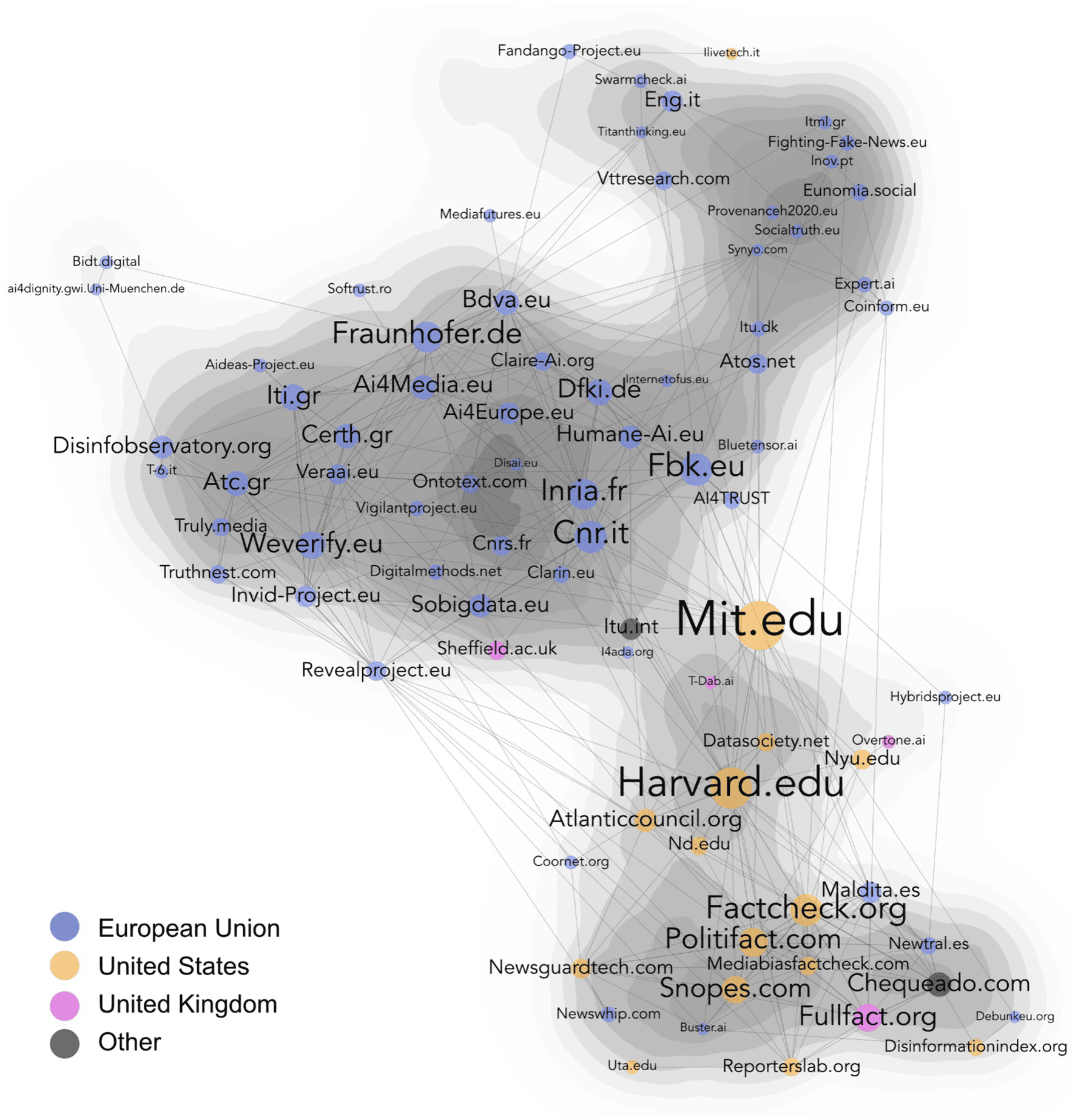

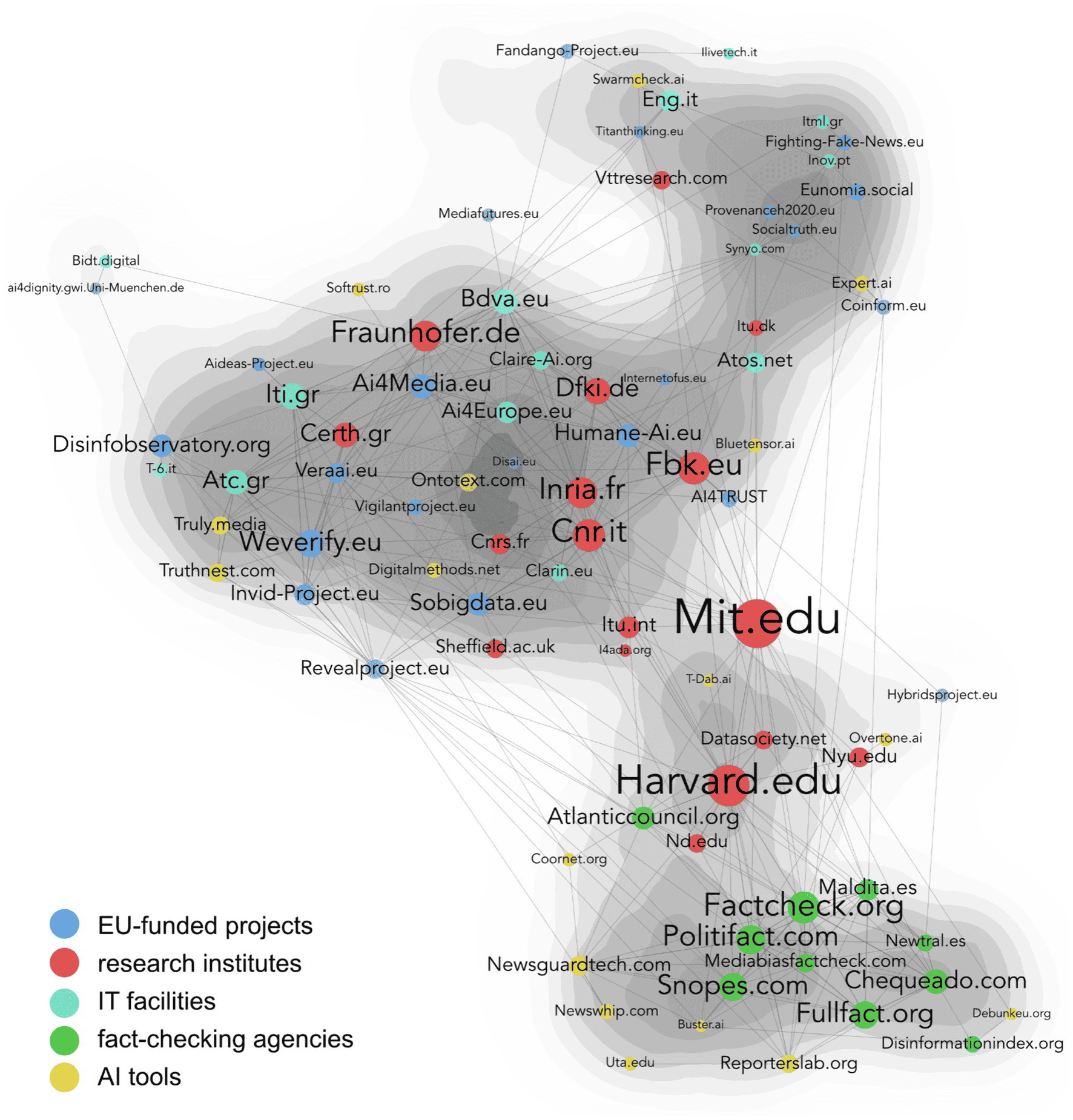

The resulting graphs contain 81 nodes and 393 links, and can be seen in the figures above. The relative position of nodes is determined by the strength (number of hyperlinks) and type (direct or indirect) of connections between nodes. Node density is visualised using a superimposed heat map, with darker grey gradients indicating high density and lighter gradients representing less dense areas.
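As a rough illustration of how link strength can be derived, the sketch below counts hyperlinks between websites and treats each count as an edge weight. This is an assumption about the general approach, not the report's actual pipeline; the helper names and the `.example` URLs are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse


def site_of(url):
    """Reduce a URL to its host name, used here as the node identity."""
    return urlparse(url).netloc.lower()


def build_weighted_edges(hyperlinks):
    """Count hyperlinks between distinct sites: {(source_site, target_site): count}."""
    edges = Counter()
    for source_url, target_url in hyperlinks:
        source, target = site_of(source_url), site_of(target_url)
        if source and target and source != target:  # ignore links within one site
            edges[(source, target)] += 1
    return edges


# Hypothetical (source page, target page) pairs, not data from the report.
hyperlinks = [
    ("https://project-a.example/about", "https://factcheck-b.example/"),
    ("https://project-a.example/news", "https://factcheck-b.example/tools"),
    ("https://project-a.example/news", "https://project-a.example/team"),
    ("https://factcheck-b.example/", "https://institute-c.example/"),
]
edges = build_weighted_edges(hyperlinks)
nodes = {site for pair in edges for site in pair}
```

A layout algorithm can then use these weights to pull strongly connected websites closer together on the map.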

Most of the included projects originate from the European Union or the United States.

The first visualisation (above) suggests that the counter-disinformation landscape consists of three interconnected clusters.

The largest cluster is located near the top and is dominated by European disinformation initiatives that are primarily associated with Horizon 2020 projects and European research institutes.

The second cluster is the smallest and least densely populated. This cluster serves as a “transitional zone” in the network and is primarily composed of US-based research institutes and international think tanks.

The third cluster is located at the bottom of the map and mainly consists of established fact-checking agencies and AI tools, most of which are US-based.

AI initiatives against disinformation by category

These graphs allow us to make a few interesting observations about the different approaches that are taken in the European Union and United States.

Firstly, we see that European projects primarily involve large national research centres, while the US primarily involves research universities and .

Despite these differences, the strategies that are used within the EU and US are almost identical. Project websites from both areas describe AI tools as a human aid rather than a direct solution to the problem of disinformation, while primarily focusing on improving the quality of information rather than simply targeting the spread of disinformation.

Conversely, the bottom area, which mainly consists of fact-checking agencies and AI tools, is strongly influenced by US legislation and policies and clearly has a different objective: the AI tools in this area primarily focus on verifying news and debunking information that has already spread on digital platforms and elsewhere.

In a nutshell, one might say that the first two clusters attempt to solve the disinformation problem “upstream” (prevention), while the third cluster fights disinformation “downstream” (remedy).

- Most AI-based initiatives against disinformation originate from the EU and US
- Academia mainly focuses on improving the quality of information, while fact-checking agencies attempt to debunk disinformation