Measuring programming experience

Software engineering studies often involve the measurement of variables. For example, a study could measure the effect of test-driven development on code quality and productivity.

Such studies typically also need to deal with so-called confounding variables, like programming experience, which can affect the outcome of surveys or experiments. If a study does not take the influence of confounding variables into account, its results could be severely biased.

Many software engineering studies involve human participants, so one would think that researchers always control for confounding variables like programming experience in their studies.

In reality, this doesn’t always happen, and when it does, it is not always clear how it is done. Researchers also use different methods to control for programming experience, which makes it harder to interpret and compare results across studies.

The goal of this study is therefore to evaluate how reliable different ways of measuring programming experience are, so that researchers know which measures to use in future studies.

The first step towards that goal involves a systematic literature review on how researchers measure programming experience.

Based on the results of this review, the authors create a questionnaire that is designed to evaluate the efficacy of those measurements. This questionnaire consists of two parts:

- questions that existing studies have used to measure programming experience;

- Java programming tasks that participants need to complete.

It is assumed that participants with more programming experience will solve more tasks correctly and will complete more tasks within the given time.

The purpose of the study was only disclosed to participants after the experiment had concluded.

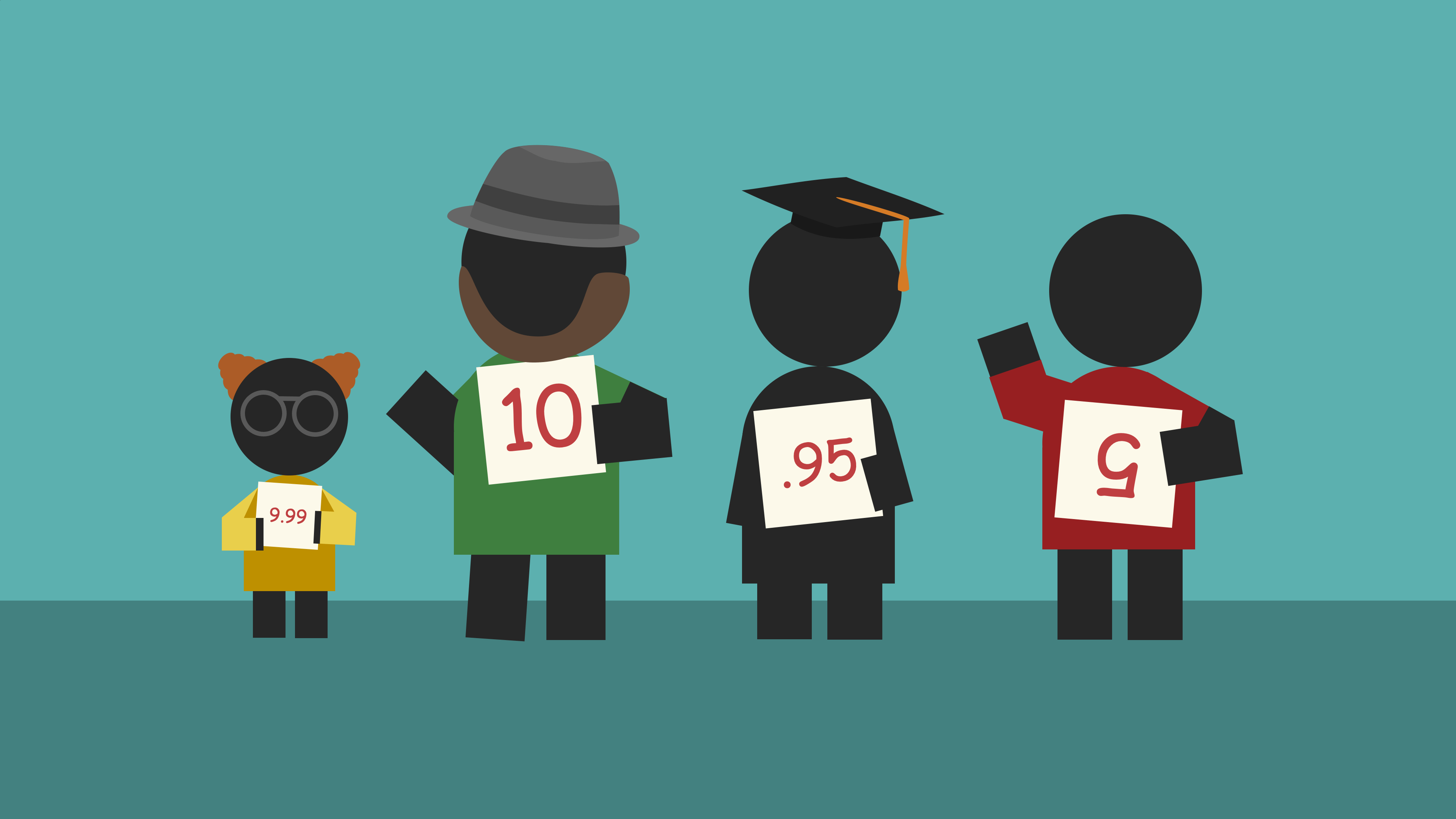

The literature review shows that studies deal with programming experience in the following ways:

- programming experience in number of years;

- level of education;

- self-estimation by participants, e.g. on a five-point scale;

- some unspecified questionnaire;

- the size of the (largest) programs that participants have written;

- performance on unspecified pre-tests that are conducted prior to the actual study;

- estimation by participants’ supervisors;

- experience is measured, but it is not specified how this happens;

- experience is not controlled for at all.

Based on these findings, the authors create the following questions for their questionnaire:

| Source | Question | Scale | Abbreviation |
|---|---|---|---|
| Self-estimation | On a scale from 1 to 10, how do you estimate your programming experience? | 1: very inexperienced to 10: very experienced | s.PE |
| | How do you estimate your programming experience compared to experts with 20 years of practical experience? | 1: very inexperienced to 5: very experienced | s.Experts |
| | How do you estimate your programming experience compared to your class mates? | 1: very inexperienced to 5: very experienced | s.ClassMates |
| | How experienced are you with the following languages: Java/C/Haskell/Prolog | 1: very inexperienced to 5: very experienced | s.Java / s.C / s.Haskell / s.Prolog |
| | How many additional languages do you know (medium experience or better)? | Integer | s.NumLanguages |
| | How experienced are you with the following programming paradigms: functional/imperative/logical/object-oriented programming? | 1: very inexperienced to 5: very experienced | s.Functional / s.Imperative / s.Logical / s.ObjectOriented |
| Years | For how many years have you been programming? | Integer | y.Prog |
| | For how many years have you been programming for larger software projects, e.g. in a company? | Integer | y.ProgProf |
| Education | What year did you enroll at university? | Integer | e.Years |
| | How many courses did you take in which you had to implement source code? | Integer | e.Courses |
| Size | How large were the professional projects typically? | NA, <900, 900-40000, >40000 | z.Size |
| Other | How old are you? | Integer | o.Age |

Most questions should speak for themselves, although a few might be a bit unclear without context:

- The languages Java, C, Haskell and Prolog were chosen ;

- s.NumLanguages should only include languages for which one has at least “medium experience”, but the paper never explains what that means;

- e.Years requires a conversion from a year (e.g. 2019) to the number of years in which a participant was enrolled (e.g. 2);

- z.Size refers to the number of lines of code.

The authors find small to strong Spearman rank correlations between about half of the questions and the number of correctly solved tasks. These correlations are listed in the table below (ρ), along with the sample size for each question (N). Bold values denote significant correlations (p < .05).

| Question | ρ | N |
|---|---|---|
| s.PE | .539 | 70 |
| s.Experts | .292 | 126 |
| s.ClassMates | .403 | 127 |
| s.Java | .277 | 124 |
| s.C | .057 | 127 |
| s.Haskell | .252 | 128 |
| s.Prolog | .186 | 128 |
| s.NumLanguages | .182 | 118 |
| s.Functional | .238 | 127 |
| s.Imperative | .244 | 128 |
| s.Logical | .128 | 126 |
| s.ObjectOriented | .354 | 127 |
| y.Prog | .359 | 123 |
| y.ProgProf | .004 | 127 |
| e.Years | -.058 | 126 |
| e.Courses | .135 | 123 |
| z.Size | -.108 | 128 |
| o.Age | -.116 | 128 |
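Spearman's ρ is the Pearson correlation computed on ranks, where tied values share their average rank. The sketch below shows how one row of the table could be computed. The function names and the toy answers are hypothetical. The sketch drops participants who skipped a question (pairwise deletion), which is an assumption about why N differs between questions. The authors' statistics software may handle ties and missing answers differently.

```python
from typing import Optional, Sequence


def average_ranks(values: Sequence[float]) -> list[float]:
    """Rank values from 1 (smallest) upwards; tied values get the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run of tied values.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1  # positions are 0-based, ranks are 1-based
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared_rank
        start = end + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / (sxx * syy) ** 0.5


def spearman_rho(answers: Sequence[Optional[float]], correct: Sequence[float]) -> tuple[float, int]:
    """Return (rho, N), skipping participants who did not answer the question."""
    pairs = [(a, c) for a, c in zip(answers, correct) if a is not None]
    if len(pairs) < 2:
        raise ValueError("need at least two answered questionnaires")
    xs = [a for a, _ in pairs]
    ys = [c for _, c in pairs]
    return pearson(average_ranks(xs), average_ranks(ys)), len(pairs)


# Hypothetical data: s.ClassMates ratings (None = skipped) and correctly solved tasks.
ratings = [3, 5, 2, 4, None, 1]
solved = [4, 6, 2, 5, 3, 1]
rho, n = spearman_rho(ratings, solved)
print(f"rho = {rho:.3f}, N = {n}")
```

With these toy answers, the skipped rating is dropped, which leaves N = 5. The two rankings then agree perfectly, so ρ = 1.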

The authors further explore their data using stepwise regression and exploratory factor analysis.

To determine which questions are the best indicators of programming experience, one can start by looking at questions with at least a medium correlation with the number of correctly solved tasks. However, questions can also be correlated with each other, in which case they partly measure the same thing. If we did not take this into account, we would overestimate the importance of these questions.

This is where stepwise regression comes in. It adds (and possibly removes) questions one at a time, based on how much each contributes, to find a small model that still explains the number of correctly solved tasks.
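The following is a minimal sketch of the idea, assuming NumPy. It implements forward selection, the simplest stepwise variant, and it never removes a predictor once it has been added. It also uses a fixed gain in R² (the hypothetical `min_gain` parameter) as the entry criterion, instead of the F-test p-values that statistics packages typically use. The data are synthetic. Column 1 is a noisy copy of column 0, which mimics two questions that measure the same thing.

```python
import numpy as np


def r_squared(X: np.ndarray, y: np.ndarray) -> float:
    """R² of an ordinary least-squares fit of y on the columns of X plus an intercept."""
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ coef
    return float(1.0 - residuals @ residuals / np.sum((y - y.mean()) ** 2))


def forward_stepwise(X: np.ndarray, y: np.ndarray, min_gain: float = 0.02) -> tuple[list[int], float]:
    """Greedily add the column that raises R² the most; stop when the gain drops below min_gain."""
    selected: list[int] = []
    remaining = list(range(X.shape[1]))
    current_r2 = 0.0
    while remaining:
        candidate_r2 = {j: r_squared(X[:, selected + [j]], y) for j in remaining}
        best = max(candidate_r2, key=candidate_r2.get)
        if candidate_r2[best] - current_r2 < min_gain:
            break  # no remaining column adds enough explanatory power
        selected.append(best)
        remaining.remove(best)
        current_r2 = candidate_r2[best]
    return selected, current_r2


# Synthetic data: column 1 is a noisy copy of column 0; the outcome depends on columns 0 and 2.
rng = np.random.default_rng(0)
n = 200
x0 = rng.normal(size=n)
x1 = x0 + rng.normal(scale=0.5, size=n)
x2 = rng.normal(size=n)
y = 2 * x0 + x2 + rng.normal(scale=0.5, size=n)
X = np.column_stack([x0, x1, x2])

selected, r2 = forward_stepwise(X, y)
print(selected, round(r2, 3))
```

On its own, column 1 correlates strongly with the outcome. Once column 0 is in the model, however, column 1 adds almost nothing, so it is left out. This is the overestimation problem that stepwise regression is meant to avoid.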

The authors conclude that s.Logical and s.ClassMates can be used to explain 24.1% of the variance in the number of correct answers and are thus the most important questions.

| Question | Beta | t | p |
|---|---|---|---|
| s.ClassMates | .441 | 3.219 | .002 |
| s.Logical | .286 | 2.241 | .030 |
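This step assumes that the Beta column reports standardized coefficients, which is the usual meaning of "Beta" in regression output, although the summary does not say so. Under that assumption, the final model predicts a participant's standardized number of correct answers from their standardized answers to the two questions. Here $z$ denotes a variable expressed in standard deviations from its sample mean:

$$
\hat{z}_{\text{correct}} = 0.441\, z_{\text{s.ClassMates}} + 0.286\, z_{\text{s.Logical}}
$$

For example, a participant who scores one standard deviation above the mean on both questions would be predicted to score $0.441 + 0.286 = 0.727$ standard deviations above the mean number of correct answers.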

One might wonder why s.Logical is more important than s.Java, which is the language used for the programming tasks. A possible explanation is that Java is a “beginner language” that all participants know relatively well, while only those who actively pursue logical programming will be very familiar with logical programming languages.

Exploratory factor analysis can be used to reduce a larger set of variables to a smaller number of underlying latent variables, or factors, which are easier to reason about. It works by identifying groups of variables that correlate with each other.
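The sketch below assumes NumPy. It extracts factors as principal components of the correlation matrix and then applies a varimax rotation, a common combination for exploratory factor analysis. Whether the authors used this exact extraction and rotation is an assumption. The data are synthetic: two hypothetical latent traits each drive three observed "questions", and the rotated loadings should recover those two groups.

```python
import numpy as np


def varimax(loadings: np.ndarray, max_iter: int = 100, tol: float = 1e-6) -> np.ndarray:
    """Rotate a loading matrix so each variable loads strongly on as few factors as possible."""
    p, k = loadings.shape
    rotation = np.eye(k)
    previous = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        # Proportional to the gradient of the varimax criterion w.r.t. the rotated loadings.
        target = rotated**3 - rotated @ np.diag(np.sum(rotated**2, axis=0)) / p
        u, s, vt = np.linalg.svd(loadings.T @ target)
        rotation = u @ vt
        current = np.sum(s)
        if previous != 0 and current < previous * (1 + tol):
            break  # converged: the criterion barely improved
        previous = current
    return loadings @ rotation


def extract_factors(data: np.ndarray, n_factors: int) -> np.ndarray:
    """Principal-component extraction from the correlation matrix, followed by varimax."""
    corr = np.corrcoef(data, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)  # eigenvalues come in ascending order
    top = np.argsort(eigvals)[::-1][:n_factors]
    loadings = eigvecs[:, top] * np.sqrt(eigvals[top])
    return varimax(loadings)


rng = np.random.default_rng(1)
n = 300
trait_a, trait_b = rng.normal(size=(2, n))
questions = [trait_a + rng.normal(scale=0.5, size=n) for _ in range(3)]
questions += [trait_b + rng.normal(scale=0.5, size=n) for _ in range(3)]
data = np.column_stack(questions)

loadings = extract_factors(data, n_factors=2)
groups = np.argmax(np.abs(loadings), axis=1)  # factor each question loads on most
print(np.round(loadings, 2))
print(groups)
```

The paper's analysis works the same way on real answers. For instance, s.Functional and s.Haskell end up in the same factor.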

The authors extract five factors of programming experience:

- experience with mainstream languages (s.C, s.ObjectOriented, s.Imperative, s.Experts, and s.Java);

- professional experience (y.ProgProf, z.Size, s.NumLanguages, s.ClassMates);

- functional experience (s.Functional, s.Haskell);

- experience from education (e.Courses, e.Years, y.Prog); and

- logical experience (s.Logical, s.Prolog).

The authors make the following recommendations in their paper.

- Report precisely which measures you use to control for programming experience.

- Use self-estimation questions to judge programming experience among undergraduate students.

- Combine multiple questions whenever possible. Some may serve as control questions to see whether subjects answered honestly.

- Use s.ClassMates and s.Logical, as these were most capable of predicting the number of correctly solved tasks in the authors’ experiments.

- Use the identified factors of programming experience to develop a theory of programming experience.