Characteristics of useful code reviews: An empirical study at Microsoft

Last week, we’ve learnt that reviewing and discussing code is a good way to catch bugs. That obviously doesn’t mean you should meticulously review and start arguments about every single line of code – but what should you do then? This week I discuss a paper by Bosu, Greiler, and Bird that explains what makes code reviews useful.

The following summary is loosely based on the second half of Code reviewing reviewed: recommendations for improving the efficiency and effectiveness of modern code reviews, an essay that I originally wrote for the Open University of the Netherlands.

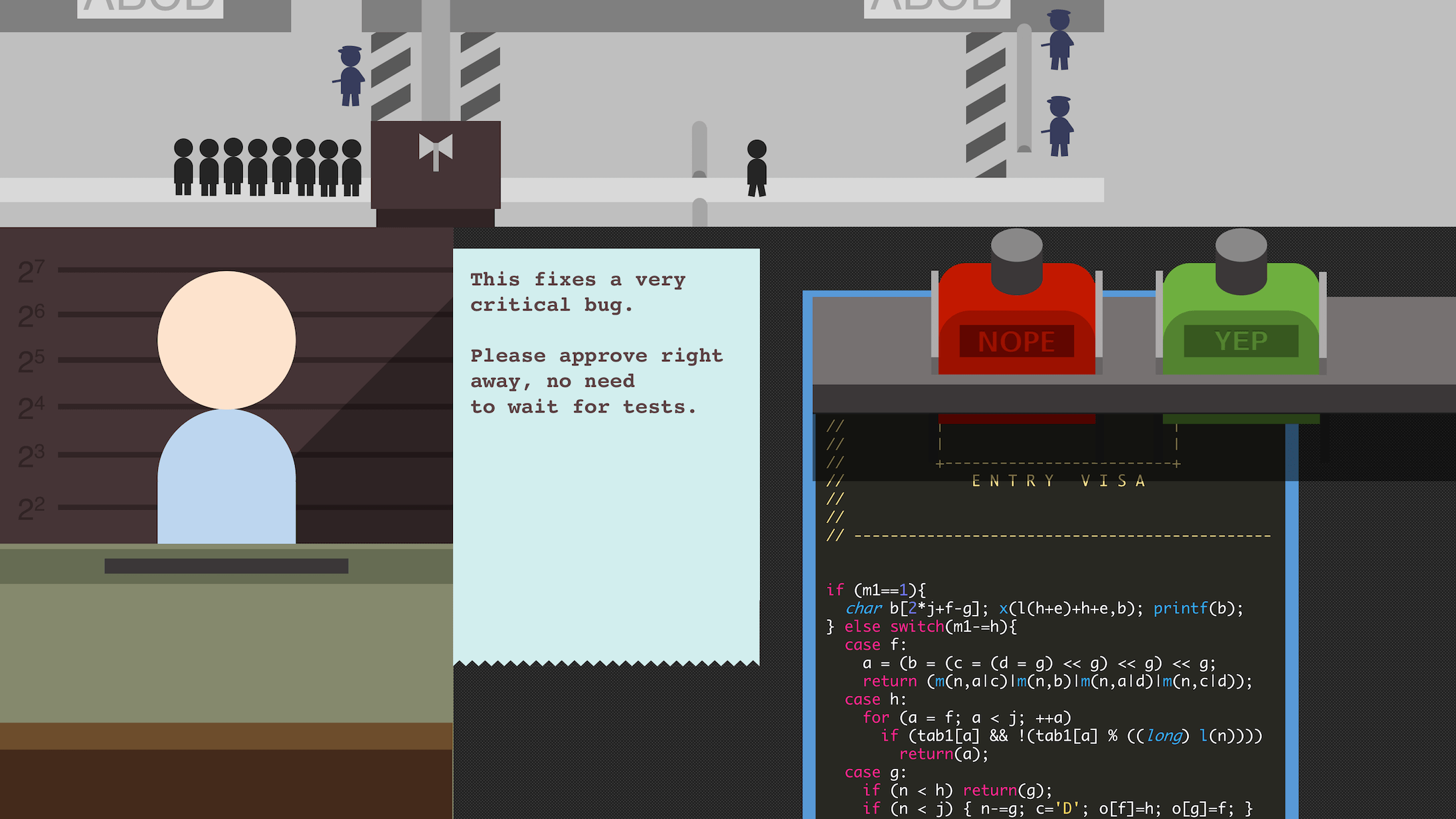

Developers spend a considerable amount of time reviewing each others’ code. The idea is that these comments can help improve the quality of the software. However, some comments are left addressed because the developer doesn’t find them useful. Such comments are kind of a waste of time, so it would be good if we could avoid them whenever possible.

The study consists of three phases.

The authors started with an explanatory study in which they held interviews with developers. Each developer was asked to classify a series of review comments as “useful” or “not useful”, and to categorise them according to one of several pre-defined comment types.

Based on these findings, the authors identified attributes that often only occur with comments that are either “useful” or “not useful”. These attributes were used to that retroactively labels comments as “useful” or “not useful” by examining the comment text, their approval status, and code changes that were possibly made because of those comments.

Finally, the classifier was run on a large set of reviews to determine how the characteristics of reviewers and the reviewed code changes affect the proportion of useful comments within reviews.

I summarise the results for each of the three phases separately.

Comments that point out flaws in the code, unhandled edge cases, or refer (junior) developers to particular APIs are generally found to be useful.

Slightly less useful are comments about things that don’t affect the functionality of the current version. Examples are post hoc comments about some alternative implementation method that could have been used instead and remarks about adherence to a coding style.

Finally, , compliments, and comments that falsely point out issues or point out issues which are not part of the reviewed change are simply “not useful”.

The few findings that are noteworthy for this phase are:

-

Comments that trigger changes by the original author are more likely to be found useful;

-

Comments that elicit .

Reviewers who have reviewed or modified a file at least once tend to provide more useful comments than those who haven’t. The proportion of useful comments generally increases up to 80% when the same file is reviewed for the fifth time, although some minor fluctuations can still occasionally happen.

What this means is that new team members should participate in code reviews as early as possible, so they can get acquainted with the code. At the same time, it’s also important to make sure that code is also reviewed by at least one experienced reviewer to maintain a decent proportion of useful comments.

About three-quarters of review comments in the dataset were made by reviewers who were part of the same team as the developer who proposed the change. Curiously enough, the proportion of useful comments is slightly higher (<1.5%) for cross-team reviewers.

The proportion of useful comments is also affected by the size of the change for which the review is requested: reviewers are more likely to overlook changes and/or ask questions to understand the code if many lines of code were changed simultaneously.

Finally, build and configuration files also tend to elicit more comments that aren’t “useful”: it is believed that their complexity makes it hard to properly assess the impact of changes within them.

-

Useful comments are those that are related to the change and that the developer can directly act on

-

Experienced reviewers provide more useful comments than inexperienced reviewers

-

Proposed changes should be kept as small as possible to keep them easily reviewable

-

Changes in non-code files should always be accompanied by an explanation of the “what” and “why”