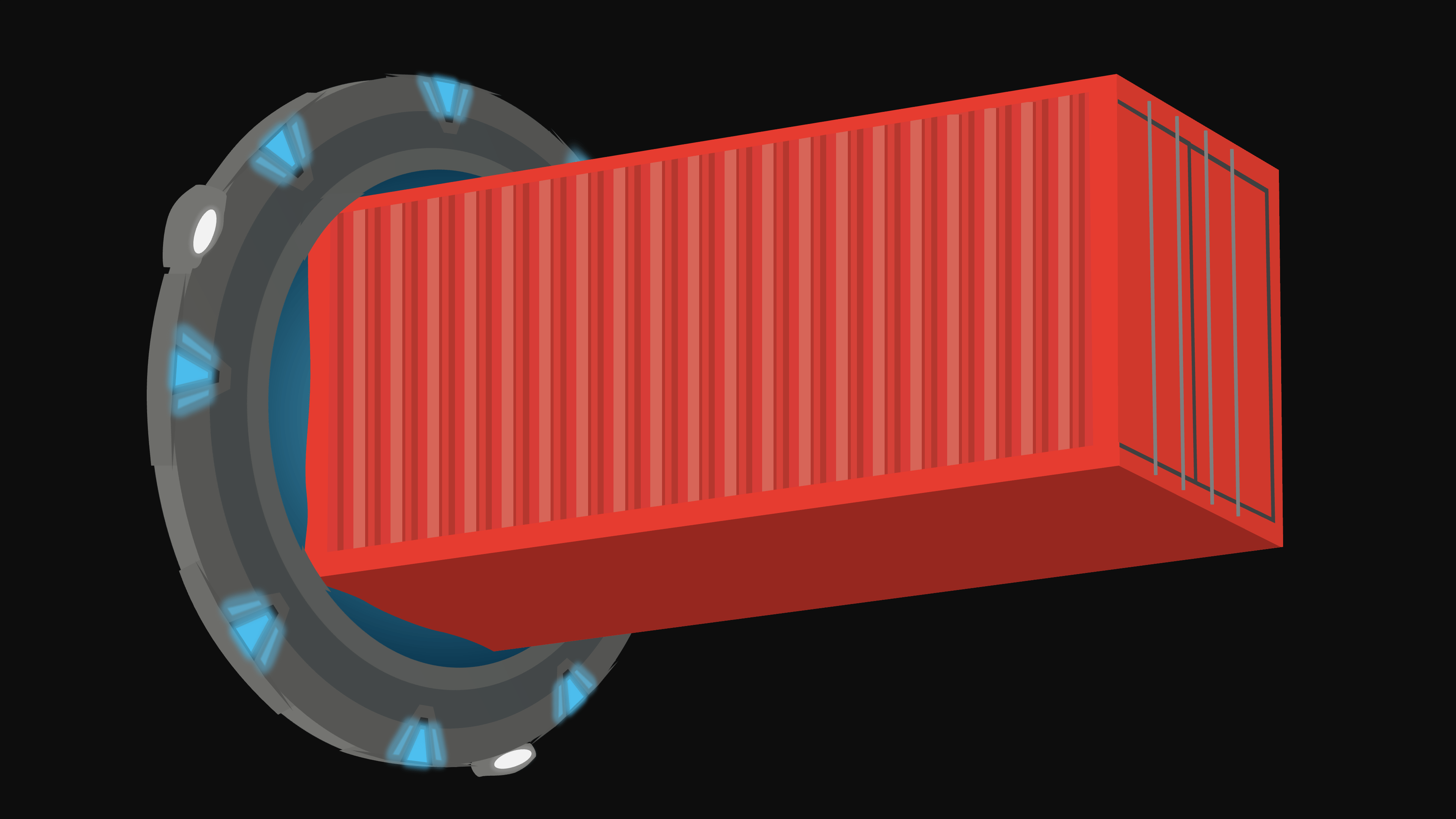

Cargo-cult containerisation

As we all know, all problems in computer science can be solved by adding yet another level of indirection. Deployments are a good example of such a problem.

Nowadays a typical web service is (1) written in a programming language that runs in a virtual machine (such as Python or Java), which is then (2) dockerised and (3) deployed using a container orchestration system like Kubernetes, which often (4) runs in virtual machines that are rented from a cloud hosting provider, such as AWS. In turn, these providers may also use multiple levels of virtualisation that are invisible to their users.

This is not necessarily bad: containerisation is useful, in the sense that it solves real problems. But it can also introduce problems, especially since this multi-layered stack has become the de facto model for software development and operations – even for simple applications that don’t need all these layers. It almost sounds like… cargo cult programming?

Let’s start with the good parts of containerisation, because it’s popular for a reason. Using containerisation offers several benefits, the most important of which are related to:

- Architecture: Systems are no longer written for specific physical computing architectures. Instead, systems are built out of containers that can be deployed flexibly into different types of environments. Dependencies and other parts of the environment can be fully controlled by developers, and each container can evolve and be deployed independently from other containers.

- Design: Containers make it easy to split development work among different sub-teams. Each sub-team only needs to worry about one container (image), for which they can freely choose their preferred programming language, frameworks, libraries, tools, and runtime. Moreover, because containers can be deployed virtually anywhere, they can be shared with other sub-teams or used for experiments in the design phase.

- Data persistence: Since each container is responsible for its own data, inter-dependencies between subsystems can be more easily avoided. This makes it possible for different containers to independently recover from possible faults, and removes the need to agree on a globally shared data format.

- Testing: Containers make it easy to test subsystems independently from each other in an automated fashion.

- Deployment: Established trends, like microservices and continuous integration, delivery and deployment, encourage the use of separate containers to remove dependencies between sub-teams. Moreover, orchestration systems such as Kubernetes make it easy to do things that would have been incredibly complicated to configure for traditional physical computing platforms, like scaling for unpredictable workloads and fail-over clustering.

- Runtime: Containers can be started, stopped, reset, and restarted independently from each other. This makes it easier to fix issues that only occur in a particular subsystem and makes it dead simple to deploy and test combinations of different versions of subsystems.

- Performance: Containerisation usually reduces system performance to some extent, because all those layers aren't free. In return, containers make it possible to optimise the behaviour of the system as a whole. Various parts of a system can be scaled independently from each other, based on usage or other infrastructure-level and application-level key indicators.

- Security: Because containers isolate applications from each other and from the host, they can be more secure than applications that are deployed directly to a server.
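The Performance point about scaling parts of a system independently can be made concrete with the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The following is a simplified Python sketch of that rule, not the autoscaler itself. The function name, service names and metric values are hypothetical, and the real autoscaler also applies a tolerance band and stabilisation windows that are left out here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Proportional scaling rule modelled on the Kubernetes HPA formula:
    ceil(current_replicas * current_metric / target_metric), clamped to
    [min_replicas, max_replicas]. Tolerance and stabilisation are omitted."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical services, each scaled on its own indicator:
# name -> (current replicas, observed average metric, target metric)
services = {
    "checkout": (4, 90.0, 60.0),  # e.g. CPU % per pod, above target
    "search": (3, 20.0, 60.0),    # well below target
}

for name, (replicas, observed, target) in services.items():
    # checkout scales out to 6 replicas; search scales in to the floor of 2
    print(name, desired_replicas(replicas, observed, target, min_replicas=2))
```

Because each service has its own target and observed value, one part of the system can scale out while another scales in at the same time; a monolith would have to be scaled as a single unit.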

Overall, containerisation is largely driven by developer convenience. It enables developers and teams to work on large-scale software systems with considerably less manpower than was required using traditional methods.

As we saw above, containerisation has a lot of benefits when it's used sensibly. However, overuse of containers can have negative effects:

- Architecture: Containerisation has become so prevalent that some treat it as a substitute for software architecture and design, even though containers are technically just a lightweight virtualisation technology that guarantees neither a meaningful architecture nor a meaningful design.

  Moreover, as containerisation reduces the need for communication between sub-teams, each team may treat their container as "their" sandbox and add new things to it without considering the impact on the architecture of the system as a whole.

- Design: Not having to know the environment in which a subsystem runs can spur opportunistic reuse, i.e. when components are picked for reuse without a deeper understanding of the components or of how and when they are meant to be used.

  What's more, even though teams may work independently on different parts of the system, they often still need shared functionality. Containerisation and the practices that come with it – like the reduced need for communication – make it harder for teams to follow shared conventions and practices.

- Data persistence: Special attention needs to be paid to data that's meant to be stored permanently. By design, data written to a container's own filesystem is lost when the container is removed or replaced (for example, when an orchestrator reschedules it), unless it's stored externally. Common solutions like Docker volumes can be complex and fragile.

- Testing: Because each container evolves independently, the eventual system may consist of any valid combination of compatible versions. Testing all these possible combinations is prohibitively expensive, so the most natural way to test end-to-end system integration is to simply go live with the production system.

- Deployment: Containerisation makes it easy to automate deployments to different types of environments. This has led to the demise of traditional IT departments (which can be seen as a good thing), but it also increases the competency requirements for the development team. It doesn't help that many orchestration systems are too complex and powerful for what teams actually need.

- Runtime: Containers have to be monitored for performance, availability, and security issues. When used in conjunction with an orchestration system like Kubernetes, developers must be familiar with tools that are specific to that orchestration system, which further increases the educational needs for developers.

- Performance: Even lightweight virtualisation can have a noticeable effect on performance. Memory footprint can increase significantly when containers introduce different versions of a runtime and middleware.

- Security: Containers provide less security than traditional operating system virtualisation (but still more than without virtualisation). Additionally, the container registry, Docker daemon and host operating system also need to be secured, and assurances must be in place to guarantee that the container image can be trusted.

- Graphical applications: Docker and similar containerisation technologies were designed for server applications that don't come with a (native) graphical interface. Workarounds exist for running graphical applications in containers, but those solutions are clunky at best.
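The Testing point in the list above is essentially combinatorial. If each service may be live in any of several versions, the number of distinct system configurations is the product of the per-service version counts. A small Python sketch, assuming hypothetical service names and version lists, and assuming every cross-combination is compatible (so the count is an upper bound):

```python
from itertools import product
from math import prod

# Hypothetical services and the versions of each that may be live at once.
compatible_versions: dict[str, list[str]] = {
    "frontend": ["2.3", "2.4", "3.0"],
    "orders": ["1.8", "1.9"],
    "payments": ["4.1", "4.2", "4.3"],
    "inventory": ["0.9", "1.0"],
    "notifications": ["5.0", "5.1", "5.2"],
}


def count_configurations(versions: dict[str, list[str]]) -> int:
    """Number of distinct system configurations (product of version counts)."""
    return prod(len(v) for v in versions.values())


def enumerate_configurations(versions: dict[str, list[str]]):
    """Yield every configuration as a {service: version} mapping."""
    names = list(versions)
    for combo in product(*(versions[name] for name in names)):
        yield dict(zip(names, combo))


print(count_configurations(compatible_versions))  # 3 * 2 * 3 * 2 * 3 = 108
print(next(enumerate_configurations(compatible_versions)))
```

The count grows exponentially with the number of services: ten services with three live versions each already give 3^10 = 59,049 configurations, which is why exhaustive end-to-end testing quickly becomes impractical.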

Containerisation is fine, just don't overdo it