Finding the most common words in a set of texts for a word cloud

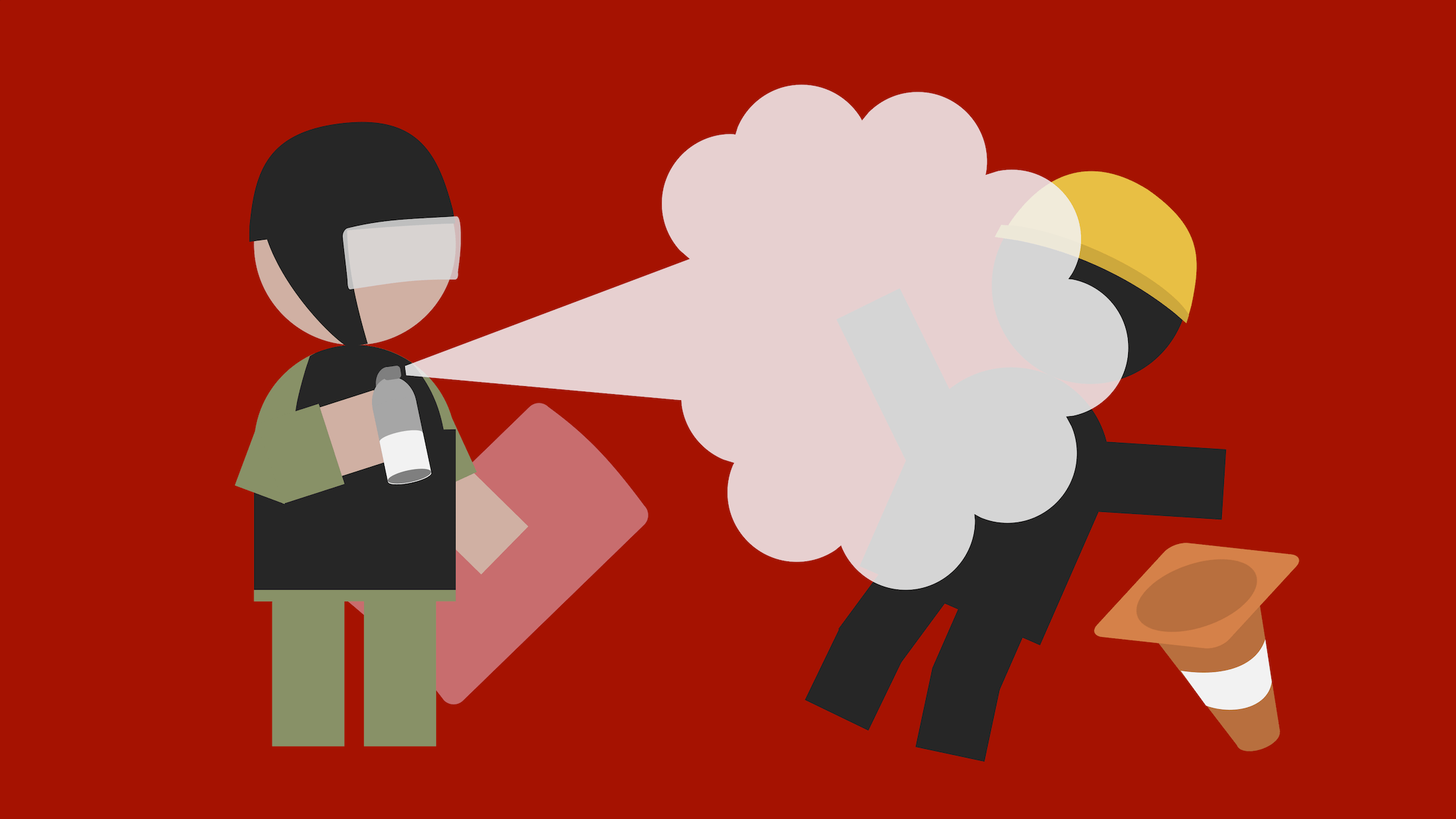

Suppose that someone walks over to your desk and asks you to create a word cloud from some news articles. The sensible thing to do here would be to tell them that “word clouds are very 2006 and people who still use them should be shot”. You don’t want to come off as rude however, so you agree to help them.

The word cloud should be based on the 10 most common words in this small, totally-not-politically-motivated selection of BBC news articles:

- Hong Kong police 'pushed to the limit'

- ‘I don’t have any hope for my future in Hong Kong’

- Hong Kong: Hecklers dragged out in new parliament chaos

- Hong Kong: Petrol bombs tossed at police in latest protest

There’s a really simple *nix solution from the 80s that gives you the 10 most common words (any sequence of alphabetic characters a-z) in some texts. It was originally published by Doug McIlroy, and does the following:

- Take all txt files in a directory and combine their contents into a single text by putting them after each other;

- Collapse all whitespace and convert the text so that each word appears on its own line;

- Convert everything to lower case, so we don’t need to deal with upper and lower case versions separately;

- Sort the words so that duplicates are all in consecutive lines;

- Deduplicate the lines and count how often they occur;

- Sort the word counts in reverse, numeric order;

- Quit when 10 results have been printed.
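The same steps can be sketched in Python. This is not McIlroy’s shell pipeline, just a rough equivalent under a few assumptions: `most_common_words` and `read_txt_files` are made-up names, and `collections.Counter` stands in for the sort, deduplicate, and sort-again steps, so words with equal counts may come out in a different order than in the original.

```python
import re
from collections import Counter
from pathlib import Path


def most_common_words(texts, n=10):
    """Return the n most frequent runs of a-z letters across the given texts."""
    combined = "\n".join(texts)                # put the texts after each other
    words = re.findall(r"[a-z]+", combined.lower())  # lower-case, then one letter run per word
    return Counter(words).most_common(n)       # count duplicates, highest count first


def read_txt_files(directory="."):
    """Hypothetical helper: read every .txt file in a directory."""
    paths = sorted(Path(directory).glob("*.txt"))
    return [path.read_text(encoding="utf-8") for path in paths]


sample = ["The police said the protest was peaceful.", "The protest went on."]
print(most_common_words(sample, 2))
# [('the', 3), ('protest', 2)]
```

For a real run you would pass `read_txt_files("some/directory")` instead of `sample`.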

McIlroy’s script technically does exactly what we’ve asked for – finding the 10 most common alphabetic character sequences – but it’s clearly not what we actually wanted: the list is topped by so-called stop words that occur often in any text and therefore don’t tell you very much about these specific articles.

We can of course choose to simply omit these words from our results. There are some ready-made stop lists on the Internet that you can use, but it’s also possible to roll your own.

A revised script can use sed to remove a few common stop words from the output.

Note that this won’t work if you want to create word clouds for something like the lyrics for The Beatles’s “Let it be”, which largely consists of stop words. It’ll work well enough for most other texts however.
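If you’d rather not do this in sed, the idea looks roughly like this in Python. The ten words in `STOP_WORDS` are an assumption picked for illustration and are not necessarily the ones the revised script removes; `drop_stop_words` is likewise a made-up name.

```python
from collections import Counter

# Hypothetical stop list for illustration; a real one would be much longer.
STOP_WORDS = {"the", "of", "to", "in", "and", "a", "that", "is", "for", "on"}


def drop_stop_words(words, stop_words=STOP_WORDS):
    """Keep only the words that are not in the stop list."""
    return [word for word in words if word not in stop_words]


words = "the police and the protesters clashed in the city and the police retreated".split()
print(Counter(drop_stop_words(words)).most_common(1))
# [('police', 2)]
```

Using a set keeps the lookup cheap, so swapping in one of the bigger ready-made stop lists doesn’t slow things down.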

Our revised script already yields a slightly more useful list, even though we’ve excluded only 10 stop words.

We can improve the quality of our results by using a larger stop list. Unfortunately, stop words aren’t our only problem.

Our script output shows that the three most common “words” in our text are “Hong”, “Kong”, and “s”. But wait, none of these are actual words!

So what went wrong here? In the second line of the script we assumed that words are non-empty sequences of alphabetical characters (a-z). But in practice it’s a bit more complicated than that.

What we need for our word cloud aren’t necessarily words, but tokens: non-empty sequences of characters that together form a single linguistic building block. Most of the time these tokens will simply be words, although there are also many, many edge cases that make proper tokenisation a non-trivial task:

- Some words, like “naïve” and “encyclopædia”, contain characters with diacritical marks or other characters that are not within the a-z range.

- Loan words like “au pair” and names like “Hong Kong” or “Chun Fei” sometimes contain spaces, but should be treated as a single token.

- Some languages have the opposite problem. In Dutch and German, for example, compound nouns are written without spaces, so you’d end up with tokens that look like “graphicaluserinterfacedevelopmentkit”. And then there are also East-Asian languages like Chinese, which do not have any spaces at all – good luck with that!

- If you attempt to split contractions like “that’s” and “it’s”, you end up with meaningless tokens like “s”. The same would happen with abbreviations.

- Words can contain hyphens. “Co-founder”, for example, should be seen as a single token. However, the same cannot be said of “totally-not-politically-motivated”, which is best seen as four words that happen to be connected to each other using hyphens.

- Tokens can contain other “weird” characters. Some examples include:

  - product names, e.g. “C#”;

  - URLs, e.g. “https://www.bbc.com/aboutthebbc”;

  - email addresses, e.g. “[email protected]”; and

  - phone numbers, e.g. “(123) 456-7890”.
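A few of these edge cases are easy to reproduce. The sketch below compares the a-z rule with a slightly looser regular expression. Both function names and the pattern are my own assumptions, and the looser pattern is a heuristic rather than a proper tokeniser: it still splits “Hong Kong” in two, it would happily glue “totally-not-politically-motivated” into one token, and it knows nothing about URLs or phone numbers.

```python
import re

# In Python 3, \w matches Unicode letters, digits and the underscore. The
# optional group keeps an apostrophe or hyphen that sits between word characters.
TOKEN_PATTERN = re.compile(r"\w+(?:['’-]\w+)*")


def az_tokens(text):
    """Tokens as the a-z rule sees them: runs of unaccented letters only."""
    return re.findall(r"[a-z]+", text.lower())


def looser_tokens(text):
    """Heuristic tokens that survive accents, contractions and hyphens."""
    return TOKEN_PATTERN.findall(text.lower())


text = "That's a na\u00efve co-founder from Hong Kong"  # \u00ef is the letter ï
print(az_tokens(text))
# ['that', 's', 'a', 'na', 've', 'co', 'founder', 'from', 'hong', 'kong']
print(looser_tokens(text))
# ["that's", 'a', 'naïve', 'co-founder', 'from', 'hong', 'kong']
```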

Proper tokenisation is a hard problem that’s still being researched, but let’s assume that you’ve fixed most of the tokenisation issues using heuristics, machine learning, or a combination thereof.

You’ll quickly run into another issue: there are many similar tokens! These tokens usually fall into one of the following categories:

- verbs, e.g. “protest”, “protests”, “protesting”, and “protested”;

- nouns, e.g. “authority”, “authorities”, “authority’s”.

Sometimes you want to treat different verb or noun forms differently, but most of the time you don’t really care and just want to merge all occurrences into a single form.

This process of merging tokens is called normalisation. There are two popular normalisation techniques that you can use: stemming and lemmatisation.

Stemming is a crude – but fairly effective – way to normalise words. It works by simply chopping off the ends of words, based on the idea that many words have similar word endings that don’t necessarily add a lot of information to their meaning: for a word cloud it’s totally fine to replace “protesting” with “protest”, for example.

Most people use one of two stemming algorithms. The Porter stemming algorithm has traditionally been the most popular algorithm for English, although nowadays you might want to use the Snowball stemming algorithm instead, as it supports more languages and gives slightly better results.

You can stem words using the Natural Language Toolkit for Python.

It clearly isn’t perfect: blunt removal of characters from a word will sometimes leave you with a stem that isn’t a real word, so we need to find a way to map stemmed words back to real words.
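To make the chopping idea concrete, here’s a toy suffix stripper in plain Python. It is emphatically not the Porter or Snowball algorithm (in NLTK those live in the `PorterStemmer` and `SnowballStemmer` classes of `nltk.stem`); the suffix list and the `toy_stem` name are assumptions made up for this sketch. It does show both the appeal and the problem, though.

```python
# Hypothetical suffix list; real stemmers use far more rules and conditions.
SUFFIXES = ("ing", "ed", "es", "s")


def toy_stem(word, min_stem=3):
    """Chop off the first matching suffix, keeping at least min_stem characters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[: -len(suffix)]
    return word


for word in ["protests", "protesting", "protested", "authorities", "dragged"]:
    print(word, "->", toy_stem(word))
# protests -> protest
# protesting -> protest
# protested -> protest
# authorities -> authoriti
# dragged -> dragg
```

The three verb forms collapse nicely into “protest”, but “authoriti” and “dragg” are exactly the kind of non-words you don’t want in a word cloud.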

The other method, lemmatisation, is more sophisticated and is based on the idea that you always look up words in dictionaries by their lemma. This means that we can normalise words by converting them to lemmas.

Obviously this requires that you have a complete dictionary in the first place and a way to figure out which lemmas belong to which words, which makes it much harder to implement.

Fortunately there’s no need to do that, as the Natural Language Toolkit also comes with a lemmatiser. It clearly produces better results than the stemmer.
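The dictionary idea itself fits in a few lines. The sketch below uses a tiny hand-written lookup table, which is purely an assumption for illustration: a real lemmatiser (NLTK’s is the `WordNetLemmatizer` class, which relies on the WordNet data) covers the whole language and usually also wants to know whether a word is used as a noun or a verb.

```python
# Hypothetical mini-dictionary mapping inflected forms to their lemma.
LEMMAS = {
    "protests": "protest",
    "protesting": "protest",
    "protested": "protest",
    "authorities": "authority",
    "dragged": "drag",
    "said": "say",
}


def toy_lemmatise(word):
    """Look the word up in the dictionary; unknown words are returned unchanged."""
    word = word.lower()
    return LEMMAS.get(word, word)


for word in ["Protesting", "authorities", "dragged", "said", "police"]:
    print(word, "->", toy_lemmatise(word))
# Protesting -> protest
# authorities -> authority
# dragged -> drag
# said -> say
# police -> police
```

Every result is a real word, including irregular forms like “said”, which no amount of suffix chopping would turn into “say”.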

If we now apply the lemmatiser to the original list of most common words and remove all stop words that are common in the English language, we end up with a more useful list.

It’s far from perfect: “Hong” and “Kong” are still listed as separate words (with different numbers of appearances!) and we may want to treat “said” as a stop word for news articles, since they frequently include quotes and paraphrases.

It’s good enough for us however. Because let’s be serious: no one’s going to look at a stupid word cloud in 2019.

- Stop words are words that occur very often in natural languages, but don’t tell you much about what a text is about.

- Tokenisation is the process of splitting a single text into a sequence of tokens (≈words). It’s kind of hard to do well.

- Stemming and lemmatisation are normalisation techniques that can help you group words with similar meanings.